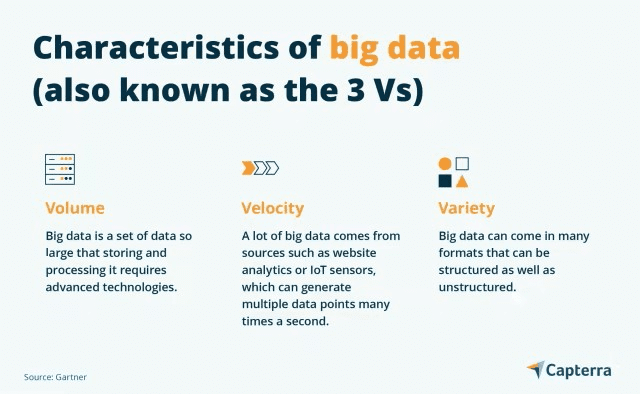

Problems with big data are related to the three important V’s: Volume, Velocity, and Variety.

According to experts at Capterra, big data has become a game changer, with 97% of companies investing in big data technology. Even so, several big data challenges still need to be addressed as a priority.

The challenges tied to these three V’s revolve around:

- Volume Management: Having sufficient technology to store, sort, and retrieve large volumes of data in bulk.

- Velocity: Data arrives from multiple sources, such as IoT devices, analytics platforms, and archives, so the speed at which it enters through many points at once has to be handled with suitable solutions.

- Variety: No two big data sets are alike. One of the major big data challenges is converting data into a structured format so that it can be presented as an easily interpretable report.

However, a closer look at the challenges for big data reveals many sub-level constraints that also need to be addressed.

In this post, we’ll talk about different aspects of problems with big data, issues with big data that can be resolved through technological solutions, and different use case scenarios where these challenges for big data are overcome.

Let’s get started.

Why Are Challenges for Big Data Hard to Overcome?

Challenges for big data are hard to overcome for several reasons. Velocity, Volume, and Variety aside, many issues with big data stem from technical limitations.

Some of the reasons are stated below:

Veracity:

Big Data sources may contain noise, inaccuracies, and inconsistencies, making it challenging to ensure data quality and reliability.

Cleaning, validating, and ensuring the accuracy of Big Data can be complex and time-consuming, especially when dealing with large datasets.
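As a rough illustration of the validation step, the sketch below checks each incoming record against a small set of rules and separates clean records from those that need attention. The field names (`sensor_id`, `temperature_c`) and the accepted temperature range are hypothetical assumptions chosen for the example, not rules from any particular system.

```python
from typing import Any, Callable

# Hypothetical quality rules: each field name maps to a predicate its value must pass.
RULES: dict[str, Callable[[Any], bool]] = {
    "sensor_id": lambda v: isinstance(v, str) and v != "",
    "temperature_c": lambda v: isinstance(v, (int, float)) and -50 <= v <= 150,
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return the problems found in one record; an empty list means it passed."""
    problems = []
    for field, rule in RULES.items():
        if record.get(field) is None:
            problems.append(f"missing {field}")
        elif not rule(record[field]):
            problems.append(f"invalid {field}: {record[field]!r}")
    return problems


def split_valid(records: list[dict[str, Any]]) -> tuple[list[dict], list[dict]]:
    """Separate records that pass every rule from those that need attention."""
    valid, rejected = [], []
    for record in records:
        (rejected if validate(record) else valid).append(record)
    return valid, rejected
```

Keeping rejected records (rather than silently dropping them) makes it possible to report on noise and inconsistencies at the source, which is often where the real fix belongs.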

Variety of Data Sources:

Big Data originates from multiple sources, both internal and external to an organization.

Integrating data from various systems, applications, and platforms can be difficult, requiring data integration frameworks, APIs, and data governance practices to ensure seamless data flow and consistency.

Scalability:

Big Data solutions need to scale horizontally to accommodate the growing data volume and user demands. Scaling up traditional systems to handle Big Data can be costly and complex, often requiring distributed computing architectures and specialized technologies like Hadoop or Spark.
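One common building block of horizontal scaling is partitioning: each record key is assigned to one of several nodes, so adding machines spreads the load. The sketch below shows simple hash partitioning under that assumption; the node count and key names are illustrative only. It uses `hashlib` rather than Python's built-in `hash()`, because string hashing in Python is randomized per process and would not give stable placement.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_nodes: int) -> int:
    """Map a record key to a node index using a hash that is stable across runs."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


def distribute(keys: list[str], num_nodes: int) -> dict[int, list[str]]:
    """Group keys by the node that would own them."""
    nodes: dict[int, list[str]] = defaultdict(list)
    for key in keys:
        nodes[partition_for(key, num_nodes)].append(key)
    return dict(nodes)
```

A limitation of this modulo scheme is that changing `num_nodes` remaps most keys; consistent hashing is a common way to reduce that reshuffling when clusters grow.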

Privacy and Security:

Big Data often contains sensitive information, raising concerns about privacy, security, and compliance.

Safeguarding data privacy, protecting against unauthorized access, and ensuring compliance with regulations like GDPR or HIPAA pose significant challenges in Big Data environments.
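One technique often used to reduce privacy exposure is pseudonymization: replacing direct identifiers with keyed hashes so records can still be joined and counted without revealing the raw values. The sketch below uses HMAC-SHA256 from Python's standard library. The hard-coded key and the field names are placeholders for illustration; in practice the key would live in a secrets manager. Note that pseudonymized data can still count as personal data under regulations like GDPR, so this complements access control and encryption rather than replacing them.

```python
import hashlib
import hmac

# Placeholder key for illustration only; a real key belongs in a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed hash; equal inputs give equal tokens."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, sensitive_fields: set[str]) -> dict:
    """Pseudonymize only the listed fields and leave the rest untouched."""
    return {
        name: pseudonymize(str(value)) if name in sensitive_fields else value
        for name, value in record.items()
    }
```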

Analytics and Insights:

Extracting meaningful insights from Big Data requires advanced analytics techniques and tools.

Analyzing large, complex datasets to discover patterns, correlations, and actionable insights may necessitate sophisticated algorithms, machine learning, or artificial intelligence approaches.

Talent and Expertise:

The field of Big Data requires specialized skills and expertise in data engineering, data science, and analytics. Finding and retaining professionals with the necessary knowledge and experience to work with Big Data can be challenging due to the demand for such skills and the rapid evolution of the field.

Cost:

Implementing and maintaining Big Data infrastructure, tools, and technologies can be costly. The investment required for hardware, software, storage, and skilled personnel can be substantial, especially for smaller organizations with limited resources.

Addressing these challenges often involves a combination of technical solutions, process improvements, talent development, and a strategic approach to Big Data management.

It requires organizations to carefully plan, invest in the right technologies, and continuously adapt to the evolving Big Data landscape.

Top 5 Issues with Big Data and How to Solve Them?

Any number of issues with big data can be overcome with the help of the right expertise and technology.

You may have heard the phrase: “Rome wasn’t built in a day.”

Rest assured, with each new technology and data discovery process, there are always challenges out there. It’s a naturally occurring phenomenon.

As a new business owner, you might run into the following challenges for big data.

- Big Data Volume Management Issues

Managing massive amounts of data poses several challenges due to the volume, variety, velocity, and veracity of the data.

Here’s why it can be problematic and how modern technology helps handle it:

Storage and Infrastructure:

Storing and managing massive amounts of data requires robust infrastructure capable of handling the scale and performance requirements.

Traditional storage systems may not be sufficient, leading to issues such as data fragmentation, limited scalability, and increased costs.

Modern technologies, like distributed file systems (e.g., Hadoop Distributed File System) and cloud storage, provide scalable and cost-effective solutions for storing large datasets.
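To show the basic idea behind a distributed file system, the sketch below splits a payload into fixed-size blocks and assigns each block to several nodes. HDFS follows this general pattern (its defaults are 128 MB blocks and three replicas per block), but this is a simplified model: it uses a tiny block size and round-robin placement, whereas HDFS itself uses a rack-aware placement policy. The node names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    data: bytes
    replicas: list[str]  # nodes holding a copy of this block


def split_and_place(payload: bytes, block_size: int, nodes: list[str], replication: int = 3) -> list[Block]:
    """Split a payload into blocks and assign each block to `replication` distinct nodes."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    blocks = []
    for i, start in enumerate(range(0, len(payload), block_size)):
        # Simplified round-robin placement; HDFS uses a rack-aware policy instead.
        replicas = [nodes[(i + r) % len(nodes)] for r in range(replication)]
        blocks.append(Block(i, payload[start:start + block_size], replicas))
    return blocks
```

Because every block has copies on several nodes, losing a single machine does not lose data, and reads can be served by whichever replica is closest or least busy.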

Data Integration:

When dealing with massive amounts of data, integration becomes complex, especially when data is sourced from multiple systems, formats, and locations.

Modern technologies like data integration platforms, extract-transform-load (ETL) tools, and data virtualization help streamline and automate the process of integrating data from various sources, enabling data consolidation and easy access.

Data Processing:

Traditional data processing systems may struggle to handle the volume and complexity of massive datasets.

Modern technologies like distributed computing frameworks (e.g., Apache Spark) and parallel processing enable efficient processing of large-scale data by distributing the workload across multiple nodes or clusters. These technologies allow for faster data processing and analytics, even on vast datasets.
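The pattern these frameworks apply at cluster scale is roughly "map each partition independently, then reduce the partial results". The pure-Python sketch below illustrates that data flow with a word count. It is not Spark's API: a thread pool stands in for cluster nodes, and because of Python's global interpreter lock it shows the structure of the computation rather than real parallel speedup.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def map_partition(lines: list[str]) -> Counter:
    """Map step: count words within a single partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def word_count(partitions: list[list[str]], workers: int = 4) -> Counter:
    """Process partitions independently, then merge the partial counts."""
    # Threads stand in for worker nodes; a framework would ship partitions to executors.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_partition, partitions))
    # Reduce step: combine per-partition counts into one result.
    return reduce(lambda a, b: a + b, partials, Counter())
```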

Real-Time Processing:

Managing massive amounts of data in real-time or near real-time is crucial for time-sensitive applications and decision-making.

Modern technologies like event streaming platforms (e.g., Apache Kafka) and stream processing frameworks (e.g., Apache Flink) enable the ingestion, processing, and analysis of streaming data at scale, providing real-time insights and actions.
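A core operation in stream processing is windowed aggregation. Frameworks such as Apache Flink support tumbling windows (fixed-size, non-overlapping time intervals); the plain-Python sketch below is not Flink's API, only an illustration of the idea. It assumes events arrive in timestamp order as `(timestamp_seconds, key)` pairs and does not handle late or out-of-order data, which production engines manage with watermarks.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_window_counts(
    events: Iterable[tuple[float, str]], window_seconds: float
) -> Iterator[tuple[float, dict[str, int]]]:
    """Yield (window_start, per-key counts) for each fixed, non-overlapping window.

    Assumes events are ordered by timestamp; empty windows are not emitted.
    """
    current_start = None
    counts: dict[str, int] = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)
        if current_start is not None and window_start != current_start:
            yield current_start, dict(counts)  # close the finished window
            counts = defaultdict(int)
        current_start = window_start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last open window
```

Because results are emitted as soon as a window closes, downstream dashboards or alerts can react within roughly one window length of the events occurring.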

Data Governance and Quality:

Ensuring data quality and governance becomes more challenging as data volume increases.

Modern data governance tools and practices help establish data quality standards, implement data policies, and monitor data lineage and usage.

Data cleansing and data profiling tools assist in maintaining data accuracy and consistency within large datasets.
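Data profiling usually starts with simple column statistics. The sketch below computes a null rate and a distinct-value count for each column of a list of records; it is a minimal stand-in for what dedicated profiling tools report, and it assumes column values are hashable (strings, numbers, and so on).

```python
from typing import Any


def profile(rows: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Compute a per-column null rate and distinct-value count.

    A column missing from a row is treated the same as an explicit None.
    """
    columns = sorted({col for row in rows for col in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v is not None]
        report[col] = {
            "null_rate": 1 - len(non_null) / len(rows),
            "distinct": len(set(non_null)),
        }
    return report
```

A sudden jump in a column's null rate, or a distinct count that no longer matches expectations, is often the first visible sign of an upstream data quality problem.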

- Multi-Point Data Integration

Getting the data from multiple touchpoints is the “easy part.”

Integrating it into an understandable format is another matter. The following suggestions can help you handle these issues with big data and achieve smooth integration.

Define Data Integration Requirements:

Start by clearly defining the integration requirements, including the purpose of integration, data sources involved, desired outcomes, and the level of data granularity needed.

Understanding the specific integration goals helps in devising an appropriate integration strategy.

Data Standardization and Data Governance:

Establish data standards and governance practices to ensure consistency and compatibility across different data sources.

This involves defining data models, formats, naming conventions, and data quality rules. Implementing a data governance framework ensures that data is accurate, complete, and consistent across the integrated sources.
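In practice, standardization often means mapping each source's fields and conventions onto one canonical model. The sketch below assumes two hypothetical sources, a CRM and a webshop, that name customer fields differently and write dates in different formats; all field names and formats are illustrative assumptions.

```python
from datetime import datetime

# Hypothetical source schemas mapped onto one canonical customer model.
FIELD_MAPS = {
    "crm": {"CustomerName": "name", "SignupDate": "signup_date"},
    "webshop": {"full_name": "name", "created": "signup_date"},
}
DATE_FORMATS = {"crm": "%d/%m/%Y", "webshop": "%Y-%m-%d"}


def to_canonical(source: str, record: dict) -> dict:
    """Rename fields to canonical names and normalize values to shared conventions."""
    mapping = FIELD_MAPS[source]
    out = {canon: record[src] for src, canon in mapping.items() if src in record}
    if "signup_date" in out:
        # Each source writes dates differently; store them all as ISO 8601 dates.
        parsed = datetime.strptime(out["signup_date"], DATE_FORMATS[source])
        out["signup_date"] = parsed.date().isoformat()
    out["name"] = out.get("name", "").strip().title()  # naming convention: trimmed title case
    out["source"] = source  # keep provenance for later auditing
    return out
```

Keeping the mappings as data rather than scattered code makes them easier to review as part of a governance process.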

Data Integration Tools and Platforms:

Utilize data integration tools and platforms that provide features like data mapping, transformation, and workflow orchestration.

These tools facilitate the extraction, transformation, and loading (ETL) processes required to integrate data from various sources.

Commonly used tools include Informatica PowerCenter, Talend, and Apache NiFi.

Data Virtualization:

Data virtualization is an approach that allows accessing and querying data from multiple sources without physically moving or duplicating it.

With data virtualization, you can create a logical view of integrated data, providing real-time access and reducing the complexity of physical data integration. Tools like Denodo and Red Hat JBoss Data Virtualization enable data virtualization capabilities.
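The central idea of data virtualization, querying sources at read time instead of copying them into a new store, can be sketched in a few lines. The `VirtualView` class below is a hypothetical illustration, not how Denodo or similar products work internally; those tools also push filters down to the sources and optimize queries across them.

```python
from typing import Callable, Iterable, Iterator


class VirtualView:
    """A logical view that reads its sources on demand instead of copying them."""

    def __init__(self, sources: dict[str, Callable[[], Iterable[dict]]]):
        self.sources = sources  # source name -> function returning its current rows

    def query(self, predicate: Callable[[dict], bool] = lambda row: True) -> Iterator[dict]:
        """Yield matching rows from every source, tagged with where they came from."""
        for name, fetch in self.sources.items():
            for row in fetch():  # fetched at query time, so results reflect live data
                if predicate(row):
                    yield {**row, "_source": name}
```

Since nothing is duplicated, a change in any underlying source shows up in the next query without a reload step.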

Application Programming Interfaces (APIs):

Utilize APIs to facilitate data integration between systems. APIs provide a standardized and structured way of exchanging data and functionalities between applications or services.

When available, using APIs simplifies data integration by leveraging predefined endpoints and data formats, enabling seamless communication between different systems.

Extract-Transform-Load (ETL) Processes:

ETL processes involve extracting data from various sources, transforming it into a common format, and loading it into a target system or data warehouse.

ETL tools and frameworks, such as Apache Spark, Apache Airflow, or Microsoft SQL Server Integration Services (SSIS), are commonly used to automate and streamline these processes.
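The three ETL stages can be illustrated end to end with Python's standard library. In the sketch below, an inline CSV string stands in for a source system and an in-memory SQLite database stands in for the warehouse; the column names and the rule of dropping rows without an amount are assumptions made for the example. The tools named above add scheduling, scaling, and monitoring on top of this basic flow.

```python
import csv
import io
import sqlite3

# Inline sample data standing in for an extracted source file.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,5.00,EUR
3,,usd
"""


def extract(text: str) -> list[dict]:
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop incomplete rows and convert values to a common format."""
    out = []
    for r in rows:
        if not r["amount"]:  # skip rows missing a required value
            continue
        out.append((int(r["order_id"]), round(float(r["amount"]), 2), r["currency"].upper()))
    return out


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
```

Using `INSERT OR REPLACE` keyed on `order_id` makes the load step safe to re-run, which matters when a scheduled job retries after a failure.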

- Data Quality Assurance and Maintenance

One of the big data challenges is denoising and decluttering data clusters. Because big data brings in a sheer volume of data sets, not all of it is useful.

Even among the useful data, not every data cluster carries the same priority; often only a select few matter most.

Here’s how you can overcome these challenges for big data from a quality maintenance and decluttering perspective.

Incomplete and Inaccurate Data:

Big data sources may contain incomplete or inaccurate information due to data entry errors, system glitches, or inconsistent data collection processes.

The overwhelming volume and velocity of big data make it more susceptible to data quality issues, making it harder to identify and rectify errors.

Lack of Data Standardization:

Big data often lacks standardized formats, definitions, and metadata, making it challenging to establish common data quality rules and measures across different datasets.

This lack of standardization makes it harder to compare, validate, and integrate disparate data sources effectively.

Limited Data Profiling and Cleansing Tools:

Traditional data profiling and cleansing tools may not scale well to handle the volume and complexity of big data. Analyzing and cleaning massive datasets requires specialized tools and techniques capable of efficiently processing and validating large volumes of data.

To overcome these problems with big data quality, organizations can consider the following strategies:

Define Data Quality Objectives:

Clearly define data quality objectives and metrics based on the specific requirements and use cases. Establish criteria for completeness, accuracy, consistency, timeliness, and relevance of the data to ensure alignment with business goals and user expectations.
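Quality objectives become actionable once they are expressed as measurable metrics with targets. The sketch below measures two of the criteria mentioned above, completeness and timeliness, and reports which objectives are not met. The thresholds, the 24-hour freshness limit, and the `updated_at` field are hypothetical assumptions; real values would come from the business requirements.

```python
from datetime import datetime, timedelta

# Hypothetical objectives and freshness limit; real values come from business requirements.
OBJECTIVES = {"completeness": 0.95, "timeliness": 0.90}
MAX_AGE = timedelta(hours=24)


def measure(rows: list[dict], required: list[str], now: datetime) -> dict[str, float]:
    """Share of rows with all required fields filled, and share updated within MAX_AGE."""
    complete = sum(all(row.get(f) not in (None, "") for f in required) for row in rows)
    fresh = sum(now - row["updated_at"] <= MAX_AGE for row in rows)
    return {"completeness": complete / len(rows), "timeliness": fresh / len(rows)}


def failed_objectives(metrics: dict[str, float]) -> list[str]:
    """List the objectives whose measured value falls below its target."""
    return [name for name, target in OBJECTIVES.items() if metrics[name] < target]
```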

Implement Data Quality Processes:

Establish robust data quality processes that cover data profiling, validation, cleansing, and enrichment.

Leverage big data quality tools that can handle the volume and complexity of big data. Automated data quality checks and continuous monitoring can help identify and rectify quality issues in real time.

Data Standardization and Governance:

Establish data standardization practices and governance frameworks to ensure consistency across different datasets.

Define data models, naming conventions, and metadata standards to improve data interoperability and facilitate data integration and analysis.

Data Cleansing and Enrichment:

Implement data cleansing techniques to identify and correct errors, inconsistencies, and duplicates in the data. Data enrichment methods, such as data augmentation with external sources or leveraging machine learning algorithms, can enhance the quality and completeness of big data.
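A typical cleansing pass standardizes values first and then removes duplicates, since many duplicates only become visible after normalization. The sketch below assumes customer records keyed on email address, keeps the first occurrence of each, and drops records with no email; a real pipeline might quarantine such records instead of discarding them.

```python
import re


def normalize_email(value: str) -> str:
    """Trim whitespace and lowercase, so cosmetic variants compare equal."""
    return value.strip().lower()


def normalize_phone(value: str) -> str:
    """Keep digits only, removing spaces, dots, dashes, and parentheses."""
    return re.sub(r"\D", "", value)


def cleanse(records: list[dict]) -> list[dict]:
    """Standardize fields, then drop duplicates on the normalized email (first wins)."""
    seen = set()
    cleaned = []
    for r in records:
        email = normalize_email(r.get("email", ""))
        if not email or email in seen:  # skip records without a key, and repeats
            continue
        seen.add(email)
        cleaned.append({**r, "email": email, "phone": normalize_phone(r.get("phone", ""))})
    return cleaned
```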

Data Lineage and Auditing:

Establish data lineage tracking and auditing mechanisms to trace the origin, transformation, and usage of big data.

This helps in understanding the data flow, identifying potential quality issues, and ensuring data accountability and compliance.
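A lightweight way to start capturing lineage is to record metadata every time a transformation runs. The decorator below is a hypothetical sketch: it logs the step name, the input source, row counts in and out, and a UTC timestamp to an in-memory list, where a real system would write to a metadata store or lineage tool.

```python
import functools
from datetime import datetime, timezone

LINEAGE: list[dict] = []  # stand-in for a metadata store


def track_lineage(step_name: str):
    """Decorator that records source, row counts, and run time for a transformation step."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(rows, *, source: str):
            result = func(rows)
            LINEAGE.append({
                "step": step_name,
                "source": source,
                "rows_in": len(rows),
                "rows_out": len(result),
                "at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorator


@track_lineage("drop_negative_amounts")
def drop_negative(rows):
    """Example transformation: remove rows with a negative amount."""
    return [r for r in rows if r["amount"] >= 0]
```

Comparing `rows_in` and `rows_out` across runs is a simple way to spot a step that suddenly starts discarding far more data than usual.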

- Selection of Big Data Management Tools

Selecting the right big data management tools can be a challenge due to the following reasons:

Rapidly Evolving Landscape:

The big data management landscape is rapidly evolving, with new tools and technologies emerging regularly.

Keeping up with the latest advancements and understanding which tools best suit specific use cases can be challenging.

Diverse Tool Ecosystem:

There is a wide range of big data management tools available, each catering to different aspects of the data management lifecycle, such as data ingestion, storage, processing, analytics, and visualization.

Navigating this diverse tool ecosystem and identifying the right combination of tools for a specific requirement can be complex.

Scalability and Performance:

Big data management involves handling large volumes of data, and tools need to be scalable and performant to handle the workload effectively.

Choosing tools that can scale horizontally and deliver high performance is crucial for managing big data efficiently.

Integration Challenges:

Integrating different big data management tools and ensuring interoperability can be challenging.

Organizations need to consider how the chosen tools will integrate with existing systems, data sources, and other tools in their technology stack.

Cost and Budget Considerations:

Big data management tools can vary widely in terms of cost and licensing models.

Determining the budget and aligning it with the capabilities and value offered by different tools can be a complex decision-making process.

To overcome these issues with selecting big data management tools, organizations can consider the following solutions:

- Clearly Define Requirements:

Start by clearly defining the specific requirements and use cases for big data management.

Understand the types of data, processing needs, scalability requirements, and desired outcomes. This helps in identifying the tool functionalities needed to address those requirements effectively.

- Conduct Proof of Concepts (POCs):

Perform proof of concepts or pilot projects to evaluate different big data management tools.

Select a representative sample of tools and assess their performance, scalability, ease of use, and compatibility with your data sources and existing infrastructure. POCs can provide hands-on experience and help in making informed decisions.

- Leverage Vendor Support and Documentation:

Engage with vendors and leverage their support and documentation resources.

Seek guidance from the vendors in understanding how their tools can address your specific requirements. Vendor support and expertise can assist in narrowing down the options and selecting the most suitable tools.

- Evaluate Open-Source Solutions:

Consider open-source big data management tools, which often provide flexibility, customization options, and cost savings.

Evaluate popular open-source projects like Apache Hadoop, Apache Spark, Apache Kafka, and Apache Flink, as well as the ecosystem of related tools around them.

- Community and Industry Recommendations:

Engage with the big data community and industry experts to gather insights and recommendations on suitable tools.

Attend conferences, join forums, and participate in online communities where professionals share their experiences and recommendations.
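When comparing tools after a proof of concept, a weighted scoring matrix is one simple way to turn the results into a ranking. The sketch below uses the POC criteria listed earlier (performance, scalability, ease of use, compatibility); the weights, the 1 to 5 scores, and the tool names are hypothetical placeholders, not benchmark results.

```python
# Hypothetical criteria weights (summing to 1.0) and 1-5 scores recorded during a POC.
WEIGHTS = {"performance": 0.35, "scalability": 0.30, "ease_of_use": 0.15, "compatibility": 0.20}
POC_SCORES = {
    "tool_a": {"performance": 4, "scalability": 5, "ease_of_use": 2, "compatibility": 3},
    "tool_b": {"performance": 3, "scalability": 4, "ease_of_use": 5, "compatibility": 5},
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-criterion scores into a single weighted total."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights should sum to 1.0")
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank(candidates: dict[str, dict[str, float]], weights: dict[str, float]) -> list[tuple[str, float]]:
    """Order candidate tools from highest to lowest weighted score."""
    scored = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

The value of this approach lies less in the final number than in forcing the team to agree on weights up front, before vendor demos shape opinions.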

- Resistance from Organizational Members

Of course, with any new technology or workflow change, a certain degree of resistance is to be expected.

Sometimes, team members are so used to existing tools that they are slow to embrace innovation. This is one of those indirect issues with big data that needs to be resolved.

Laying off these employees should be the last resort. Here’s why this resistance arises and how it can be resolved.

- Fear of Change:

Resistance often stems from the fear of change. Introducing big data solutions may disrupt existing workflows, roles, and responsibilities, creating uncertainty among employees.

They may worry about their job security, loss of control, or the need to learn new skills.

- Solution:

Address the fear of change through effective change management strategies.

Clearly communicate the benefits of big data solutions, how they align with organizational goals, and the opportunities they create for personal growth and development.

Involve employees early in the process, provide training and support, and emphasize the positive impact on their work and the organization as a whole.

- Lack of Awareness or Understanding:

Resistance may arise when organizational members do not have a clear understanding of big data and its potential benefits.

They may be skeptical about its value and impact on their day-to-day work.

- Solution:

Conduct educational programs to build awareness and understanding of big data.

Explain the value proposition, potential use cases, and success stories from similar organizations.

Provide training sessions through technology champions, and conduct workshops or webinars to help employees grasp the concepts, benefits, and practical applications of big data solutions.

- Threat to Existing Power Dynamics:

Big data solutions often enable data-driven decision-making, which may challenge existing power dynamics or hierarchies within the organization.

Individuals who hold influence or decision-making authority may resist changes that could shift the balance of power.

- Solution:

Engage with key stakeholders and decision-makers early in the process. Involve them in the planning and decision-making stages to address their concerns, gain their support, and ensure that their perspectives are considered.

Emphasize how big data solutions can enhance decision-making processes and enable more efficient and effective outcomes.

- Perceived Loss of Control:

Some employees may feel that big data solutions will take away their control over data or decision-making processes. They may resist the idea of relying on data-driven insights rather than their intuition or experience.

- Solution:

Foster a culture of data literacy and data-driven decision-making.

Educate employees on how big data solutions complement their expertise and empower them to make more informed decisions. Emphasize that big data solutions are tools to enhance decision-making, not replace human judgment.

Provide opportunities for employees to contribute their domain expertise to the data analysis and interpretation process.

- Data Privacy and Security Concerns:

Integrating big data solutions may raise concerns about data privacy and security among employees. They may worry about the misuse or mishandling of sensitive information.

- Solution:

Communicate the measures and protocols in place to ensure data privacy and security.

Outline the compliance measures, data governance policies, and encryption techniques used to protect sensitive data.

Involve the IT and security teams to address specific concerns and provide assurances regarding data protection.

- Lack of Trust in the Data:

Employees may resist big data solutions if they lack trust in the quality or accuracy of the data. They may question the validity of insights derived from the data.

- Solution:

Establish data quality processes and ensure transparency in data collection, processing, and analysis. Implement data governance practices and provide visibility into the data lineage and data quality controls.

Encourage a culture of data transparency and open dialogue, where employees can raise concerns or provide feedback regarding data quality issues.

Addressing resistance requires a holistic approach that combines effective communication, education, stakeholder involvement, and addressing specific concerns.

Conclusion

Ultimately, it’s a matter of recognizing data challenges and working through different scenarios in which these issues with big data can be resolved. If your current approach to overcoming challenges for big data is falling short, feel free to reach out to the experts at Blue Zorro.

We’d be more than happy to create a big data issue resolution roadmap for your company and help you implement it as a top priority.